So, do you claim we are alone in the universe?

No. We claim we could be alone, and the probability is non-negligible given what we know… even if we are very optimistic about alien intelligence.

What is the paper about?

The Fermi Paradox – or rather the Fermi Question – is “where are the aliens?” The universe is immense and old, and intelligent life ought to be able to spread or signal over vast distances, so if it has some modest probability we ought to see some signs of intelligence. Yet we do not. What is going on? The reason it is called a paradox is that there is a tension between one plausible theory ([lots of sites] x [some probability] = [aliens]) and an observation ([no aliens]).
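For reference, the bracketed "plausible theory" is usually written as the Drake equation. The form below is the standard textbook one; the symbols are the conventional names and are not spelled out in this post:

$$
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
$$

Here $N$ is the number of detectable civilisations in the galaxy, $R_{*}$ the rate of star formation, $f_{p}$ the fraction of stars with planets, $n_{e}$ the number of habitable planets per system, $f_{l}$, $f_{i}$ and $f_{c}$ the fractions where life, intelligence and detectable technology emerge, and $L$ the time such a civilisation remains detectable.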

Dissolving the Fermi paradox: there is not much tension

We argue that people have been accidentally misled to feel there is a problem by being overconfident about the probability.

The problem lies in how we estimate probabilities from a product of uncertain parameters (as in the Drake equation). The typical way people informally do this with the equation is to admit that some guesses are very uncertain, give a “representative value” for each, and end up with some estimated number of alien civilisations in the galaxy – which is admitted to be uncertain, yet is still a single number.

Obviously, some authors have argued for very low probabilities, typically concluding that there is just one civilisation per galaxy (“the N ≈ 1 school”). This may actually still be too much, since that means we should expect signs of activity from nearly any galaxy. Others give slightly higher guesstimates and end up with many civilisations, typically as many as one expects civilisations to last (“the N ≈ L school”). But the proper thing to do is to give a range of estimates, based on how uncertain we actually are, and get an output that shows the implied probability distribution of the number of alien civilisations.

If one combines either published estimates or ranges compatible with current scientific uncertainty, we get a distribution that makes observing an empty sky unsurprising – yet is also compatible with us not being alone.

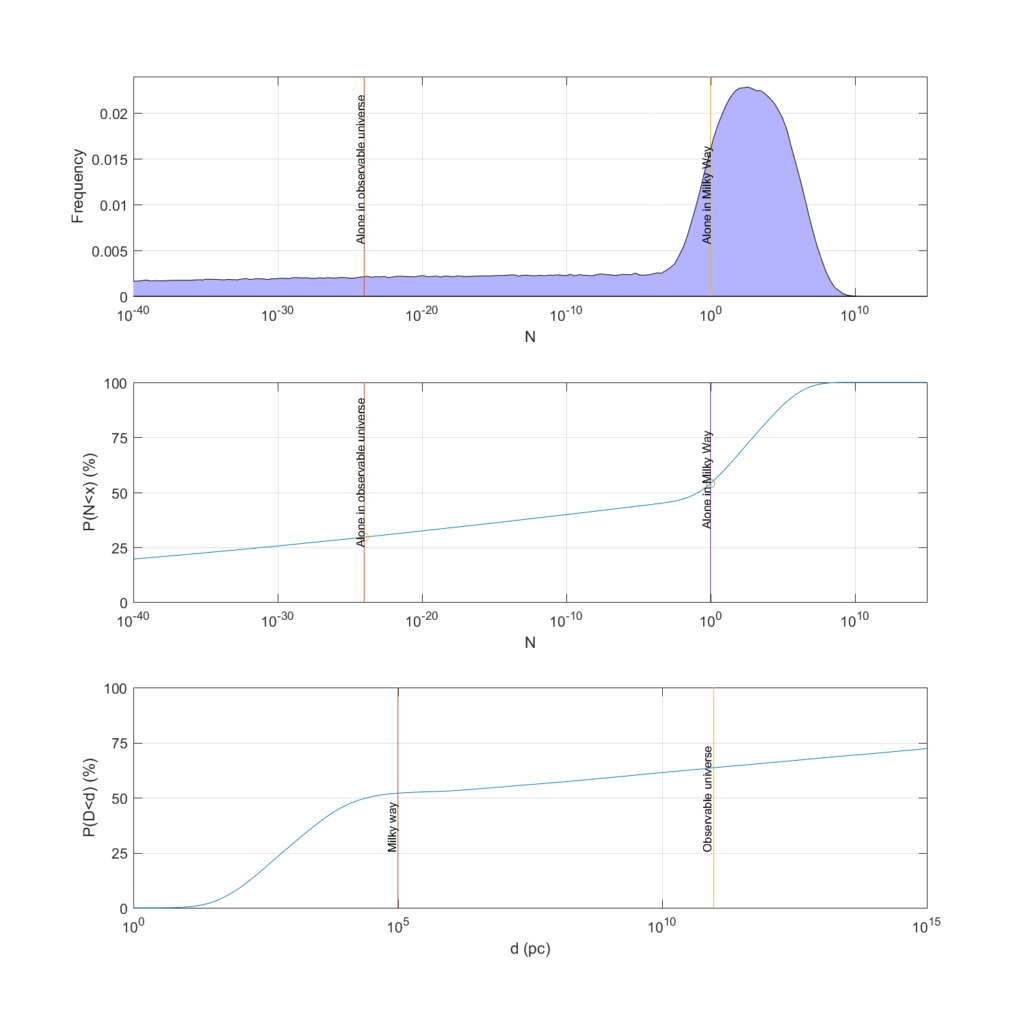

The reason is that even if one takes a pretty optimistic view (the published estimates are after all biased towards SETI optimism, since the sceptics do not write as many papers on the topic) it is impossible to rule out a very sparsely inhabited universe, yet the mean value may be a pretty full galaxy. And current scientific uncertainties about the rates of life and intelligence emergence are more than enough to create a long tail of uncertainty that puts a fair credence on extremely low probabilities – probabilities much smaller than what one normally likes to state in papers. We get a model where there is a 30% chance we are alone in the visible universe, a 53% chance we are alone in the Milky Way… and yet the mean number is 27 million and the median about 1! (see figure below)

This is a statement about knowledge and priors, not a measurement: armchair astrobiology.

(A) A probability density function for N, the number of civilisations in the Milky Way, generated by Monte Carlo simulation based on the authors’ best estimates of our current uncertainty for each parameter. (B) The corresponding cumulative distribution function. (C) A cumulative distribution function for the distance to the nearest detectable civilisation.
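To make the Monte Carlo idea concrete, here is a minimal sketch of how such a distribution can be generated. It is not the paper's model: the parameter bounds in `RANGES` are illustrative assumptions, every factor is simply treated as log-uniform, and the names `sample_log10_n` and `simulate` are made up for this example.

```python
import math
import random
import statistics

# Hypothetical (low, high) bounds for each Drake factor. These are illustrative
# assumptions, not the ranges used in the paper.
RANGES = {
    "R_star": (1.0, 100.0),  # star formation rate per year
    "f_p": (0.1, 1.0),       # fraction of stars with planets
    "n_e": (0.1, 1.0),       # habitable planets per system
    "f_l": (1e-30, 1.0),     # fraction where life emerges
    "f_i": (1e-3, 1.0),      # fraction where intelligence emerges
    "f_c": (1e-2, 1.0),      # fraction that become detectable
    "L": (1e2, 1e10),        # detectable lifetime in years
}


def sample_log10_n(rng):
    """Draw log10(N) with every factor log-uniform within its bounds."""
    return sum(
        rng.uniform(math.log10(low), math.log10(high))
        for low, high in RANGES.values()
    )


def simulate(n_samples=50_000, seed=0):
    """Summarise the implied distribution of N, the number of civilisations."""
    rng = random.Random(seed)
    logs = [sample_log10_n(rng) for _ in range(n_samples)]
    values = [10.0 ** x for x in logs]
    return {
        "p_alone": sum(x < 0.0 for x in logs) / n_samples,  # share of draws with N < 1
        "median": statistics.median(values),
        "mean": statistics.fmean(values),
    }


if __name__ == "__main__":
    for name, value in simulate().items():
        print(f"{name}: {value:.3g}")
```

With bounds this wide, most draws give N below 1 while the mean is pulled far above 1 by a small number of very crowded galaxies. That is the same qualitative pattern as in the figure, although the specific numbers depend entirely on the assumed ranges.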

The Great Filter: lack of obvious aliens is not strong evidence for our doom

After this result, we look at the Great Filter. We have reason to think at least one term in the Drake equation is small – either one of the early ones indicating how much life or intelligence emerges, or one of the last ones that indicate how long technological civilisations survive. The small term is “the Filter”. If the Filter is early, that means we are rare or unique but have a potentially unbounded future. If it is a late term, in our future, we are doomed – just like all the other civilisations whose remains would litter the universe. This is worrying. Nick Bostrom argued that we should hope we do not find any alien life.

Our paper gets a somewhat surprising result: when updating our uncertainties in the light of no visible aliens, it reduces our estimate of the rate of life and intelligence emergence (the early filters) much more than the longevity factor (the future filter).

The reason is that if we exclude the cases where our galaxy is crammed with alien civilisations – something like the Star Wars galaxy where every planet has its own aliens – then that leads to an update of the parameters of the Drake equation. All of them become smaller, since we will have a more empty universe. But the early filter ones – life and intelligence emergence – move downwards much more than the expected lifespan of civilisations, since they are much more uncertain (at least 100 orders of magnitude!) than the merely uncertain future lifespan (just 7 orders of magnitude!).

So this is good news: the stars are not foretelling our doom!

Note that a past great filter does not imply our safety.

The conclusion can be changed if we reduce the uncertainty of the past terms to less than 7 orders of magnitude, or if the involved probability distributions have weird shapes. (The mathematical proof is in supplement IV, which applies to uniform and normal distributions. It is possible to add tails and other features that break this effect – yet believing such distributions of uncertainty requires believing rather strange things.)
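The updating argument can be checked numerically with a toy model. The sketch below is not the proof from the supplement. It assumes two uniform priors in log10 units, one spanning 100 orders of magnitude for the early term and one spanning 7 for the lifespan term, lumps all other factors into a constant, and uses a hypothetical cut-off `max_log10_n` to stand in for "no crammed galaxy". The function name `update_on_empty_sky` is invented for this example.

```python
import random
import statistics


def update_on_empty_sky(n_samples=200_000, max_log10_n=0.0, seed=0):
    """Compare how far each prior mean moves after conditioning on a quiet galaxy.

    Hypothetical priors, in log10 units:
      early term (life and intelligence emergence): uniform over 100 orders
      lifespan term: uniform over 7 orders
    All other Drake factors are lumped into a constant of 0 for simplicity.
    """
    rng = random.Random(seed)
    prior = []      # every (early, life) draw
    posterior = []  # draws consistent with a sparsely inhabited galaxy
    for _ in range(n_samples):
        early = rng.uniform(-100.0, 0.0)
        life = rng.uniform(2.0, 9.0)
        prior.append((early, life))
        if early + life < max_log10_n:  # rejection step: drop crowded galaxies
            posterior.append((early, life))

    def mean_of(draws, index):
        return statistics.fmean(d[index] for d in draws)

    return {
        "early_shift": mean_of(posterior, 0) - mean_of(prior, 0),
        "life_shift": mean_of(posterior, 1) - mean_of(prior, 1),
    }


if __name__ == "__main__":
    for name, shift in update_on_empty_sky().items():
        print(f"{name}: {shift:+.3f} orders of magnitude")
```

Under these assumptions both means move downwards, but the early term moves by orders of magnitude while the lifespan term barely changes. Narrowing the early prior to a width comparable to the lifespan prior removes most of that asymmetry, which matches the caveat above.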

Isn’t this armchair astrobiology?

Yes. We are after all from the philosophy department.

The point of the paper is how to handle uncertainties, especially when you multiply them together or combine them in different ways. It is also about how to take lack of knowledge into account. Our point is that we need to make knowledge claims explicit – if you claim you know a parameter to have the value 0.1 you better show a confidence interval or an argument about why it must have exactly that value (and in the latter case, better take your own fallibility into account). Combining overconfident knowledge claims can produce biased results since they do not include the full uncertainty range: multiplying point estimates together produces a very different result than when looking at the full distribution.
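A small worked example shows how far a point estimate can sit from the distribution it summarises. Assume, purely for illustration, a single factor $x$ that is log-uniform between $10^{-k}$ and $1$ (the width $k$ is a made-up number, not one from the paper). Its median and mean are

$$
\operatorname{median}(x) = 10^{-k/2}, \qquad
\mathbb{E}[x] = \frac{1 - 10^{-k}}{k \ln 10}.
$$

For $k = 30$ this gives

$$
\operatorname{median}(x) = 10^{-15}, \qquad
\mathbb{E}[x] \approx \frac{1}{30 \times 2.303} \approx 0.0145,
$$

so the mean and the median of the same uncertain factor differ by about 13 orders of magnitude. Whichever one is picked as the "representative value", a single number hides almost everything about where the probability mass actually lies.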

All of this is epistemology and statistics rather than astrobiology or SETI proper. But SETI makes a great example since it is a field where people have been learning more and more about (some) of the factors.

The same approach as we used in this paper can be used in other fields. For example, when estimating risk chains in systems (like the risk of a pathogen escaping a biosafety lab), taking uncertainties in knowledge into account will sometimes produce important heavy tails that are irreducible even when you think the likely risk is acceptable. This is one reason risk estimates tend to be overconfident.
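The risk-chain point can be sketched the same way. Everything in the example below is hypothetical: three independent barriers, each with a point estimate of one failure in a thousand, each uncertain by two orders of magnitude in either direction, and an "acceptable" overall risk of 1e-7. The function `chain_risk` and its parameters are invented for illustration.

```python
import random
import statistics


def chain_risk(n_barriers=3, point_log10=-3.0, spread=2.0,
               acceptable=1e-7, n_samples=100_000, seed=0):
    """Escape requires every barrier to fail; each failure probability is uncertain.

    Hypothetical numbers: each barrier has a point estimate of 10**point_log10
    and is treated as log-uniform within +/- `spread` orders of magnitude.
    """
    rng = random.Random(seed)
    risks = [
        10.0 ** sum(
            rng.uniform(point_log10 - spread, point_log10 + spread)
            for _ in range(n_barriers)
        )
        for _ in range(n_samples)
    ]
    return {
        "point_estimate": 10.0 ** (n_barriers * point_log10),
        "mean_risk": statistics.fmean(risks),
        "p_exceeds_acceptable": sum(r > acceptable for r in risks) / n_samples,
    }


if __name__ == "__main__":
    for name, value in chain_risk().items():
        print(f"{name}: {value:.3g}")
```

With these made-up numbers the point estimate sits well below the acceptable level, yet roughly one draw in six exceeds it and the mean risk is far higher than the point estimate. The tail comes from the uncertainty itself, not from any single pessimistic guess.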
